AI in Verification

Artificial Intelligence has proven to be a powerful tool for analyzing large volumes of data, making it an important asset for future arms control verification regimes. This chapter explores potential consequences of AI for verification. We find that the availability of proper training data for verification purposes can pose a challenge that needs to be addressed by AI engineers building models for verification. The explainability of AI results – i.e. the reasoning behind outputs – must be addressed by developers and practitioners to ensure sound verification assessments and to give inspectors insight into the potential and limits of decision-supporting AI models. To create trust in and acceptance of AI-aided verification and monitoring, AI verification systems should be developed and tested jointly with all treaty parties. Training data and models should be shared among parties, where possible, to foster transparency and enable independent validation.

The last several years have seen a continuous increase in the amount of data available for verifying non-proliferation and arms control commitments and for monitoring relevant activities. On the one hand, this concerns data sources that are already used for such purposes today. The use of different measurement sensors is increasing, especially for novel remote monitoring applications. For example, the International Atomic Energy Agency (IAEA) operates more than 1,300 surveillance cameras, which help reduce on-site inspections while maintaining continuity of knowledge over nuclear material.1 The Comprehensive Nuclear-Test-Ban Treaty Organization (CTBTO) operates monitoring stations across the globe to detect prohibited nuclear weapons tests. Data, such as seismic or radionuclide measurements, is continuously recorded by 321 stations and the data streams are constantly evaluated.2 Furthermore, commercial satellites can image an arbitrary point on the earth at least every few hours.3 On the other hand, the question arises as to what extent the staggering amount of open-source information – including from social media – could be exploited in the future for verification and monitoring objectives. This includes heterogeneous data, such as text, pictures, audio, and video.

Analyzing the growing amounts of data used in today’s verification and monitoring contexts is already a demanding task, given the limited number of inspectors and analysts. Expanding the amount of data used for verification and monitoring will therefore require computational support. This is especially the case where data is heterogeneous, unstructured and complex. This chapter discusses Artificial Intelligence as a tool for analyzing data in the international security and peace context. Can it become a reliable and trusted verification and monitoring tool?

AI will likely become relevant for multiple areas of arms control.4 Different fields make use of common data sources, such as satellite imagery, and share similar data analysis challenges. Beyond current treaty-based verification regimes, AI applications can also be relevant in broader areas, such as ceasefire monitoring, peacekeeping operations, or open-source societal verification. Indeed, some of the first AI applications stem from these areas.

Verification-Relevant Data

Data can be generated with or without the intention of being used for verification. On the one hand, instrument-driven data – such as technical measurement or surveillance data – has always been used for inspections, but the range and volume of such data have increased with new remote monitoring approaches. Such data primarily serves the purpose of assessing compliance with arms control obligations. The collection of measurement data can be tested and adapted in laboratories and other controlled environments, and measurement devices can be designed and calibrated for the purpose.

On the other hand, an increasing amount of open-source data has recently become available across a range of media. While the purpose of this data is not originally related to verification, it may still contain information useful for this purpose. The data, usually in the form of text, image, audio, and video files, is often freely accessible on the internet – e.g. in the form of social media posts – or needs to be digitized – e.g. newspaper articles or radio transmissions. Produced almost everywhere in the world, this data can be relevant for verification because it may reveal undeclared facilities or activities. However, while readily available, the data is unstructured and the origin of the data poses several challenges for analysis, such as different languages and data formats, exceptionally large volumes, and limited possibilities for verifying credibility.

It must be emphasized that it is not currently known how relevant open-source data is for verification purposes. Comprehensive screenings of this data could be considered excessive and raise questions of proportionality. Such data would most likely not be accepted as a basis for compliance assessments, but rather for less formal monitoring purposes. The purpose of such monitoring could be to obtain indications that could then trigger formal verification activities, e.g. by helping to determine when and where to conduct on-site inspections. If effective, such procedures could possibly reduce the number of on-site inspections.

AI-Aided Data Analysis

AI is especially suited to handling large and diverse data sets and can identify patterns and correlations. Several AI-based analyses exist that could be adapted for verification or monitoring activities. For instance, AI has been used to cluster and label social media posts in crisis scenarios,5 an approach that could be adapted to identify ceasefire violations, rising tensions, or imminent clashes between armed groups. In a nuclear-related study, the ability of AI systems to analyze different forms of data simultaneously was used to scan texts, images, audio, and video files to monitor nuclear proliferation activities by querying the AI to identify data associated with devices for uranium enrichment.6 Another study developed a field-deployable AI to identify chemical warfare agents with colorimetric sensors.7 Further AI applications for verification are described in the annex to this chapter.

As a tool for verification, the output of an implemented AI needs to be linked to a clear verification objective. We distinguish between selective and probabilistic outputs of AI applications. A selective output is ideally a specific subset of the input data that might contain verification-relevant information, such as indicators of non-compliance. A human analyst must review and assess the actual importance of the identified data. The AI is not expected to draw any definitive conclusions; instead, it helps human inspectors focus on the particular data that inform their assessments. An AI could, for example, identify images that show treaty-prohibited items, or highlight suspicious activities. A probabilistic output, typically in the context of sensor data, is a direct assessment, i.e. a derived quantity. Such an AI could, for example, determine the location from which a shell has been fired during a ceasefire, or the likelihood that a certain operation is taking place. In these cases, the AI takes on a more direct role in drawing conclusions.
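The difference between the two output types can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the item identifiers, the relevance scores, the review threshold, and the simplification that scores are independent. It is not taken from any of the cited systems.

```python
from dataclasses import dataclass


@dataclass
class ScoredItem:
    item_id: str      # hypothetical identifier of an image or post
    relevance: float  # model score in [0, 1]; values below are made up


def selective_output(items, threshold=0.8):
    """Return the subset of items flagged for human review, highest score first."""
    flagged = [it for it in items if it.relevance >= threshold]
    return sorted(flagged, key=lambda it: it.relevance, reverse=True)


def probabilistic_output(items):
    """Return one derived quantity: the probability that at least one item
    is relevant, assuming (unrealistically) independent scores."""
    p_none = 1.0
    for it in items:
        p_none *= 1.0 - it.relevance
    return 1.0 - p_none


items = [
    ScoredItem("img-001", 0.95),
    ScoredItem("img-002", 0.10),
    ScoredItem("img-003", 0.85),
]

# Selective: a short queue that a human analyst still has to assess.
review_queue = selective_output(items)

# Probabilistic: a single number that already reads like a conclusion.
activity_probability = probabilistic_output(items)
```

In the selective case the analyst receives two of the three items and never sees the third, whereas in the probabilistic case the analyst receives only the aggregated number.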

Challenges

Training Data

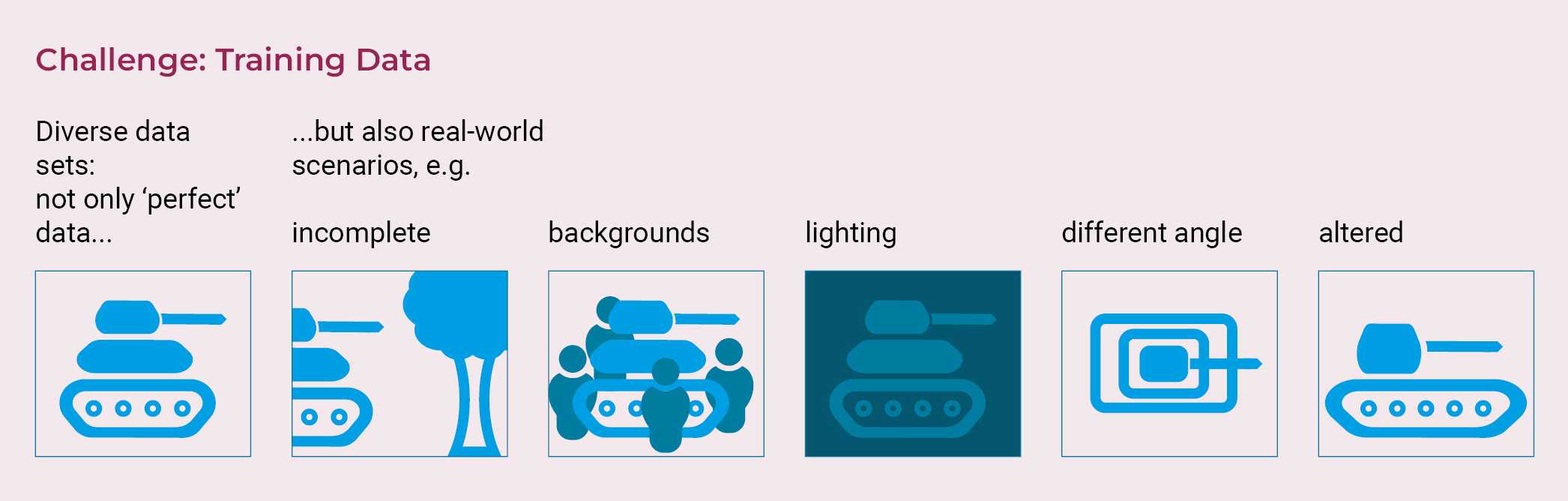

An important challenge is the training data for the AI. The quality, diversity and unbiasedness of the training data set are decisive factors for the quality of the model. In general, training data sets need to be large enough and contain enough data that is similar to the expected real-world scenarios under observation. For instance, if an AI is tasked with identifying images of various weapons, the training set should include varied images – e.g. different angles, lighting, backgrounds, and partial or altered objects. Obtaining this kind of data can be a tedious endeavor and can become even more challenging if constantly updated data sets are required to adapt to dynamic developments. The required data can be scarce or non-existent. It is possible to increase the data set size by augmenting existing data8 or to create data sets with synthetic computer-generated data. However, a model trained on such data might not be robust – that is, it could perform poorly when applied to real-world data. Both real-world and computer-generated training data can suffer from inherent biases that may be adopted by AI models in their training.
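As a rough illustration of what augmenting existing data can mean, the following sketch derives geometric variants (a mirror image and three rotations) from a single image. The image is represented as a nested list of made-up pixel values to keep the example self-contained; real pipelines would typically use an image library and a wider range of transformations such as lighting changes or cropping.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def augment(image):
    """Return the original image plus simple geometric variants."""
    variants = [image, flip_horizontal(image)]
    rotated = image
    for _ in range(3):  # 90, 180 and 270 degrees
        rotated = rotate_90_clockwise(rotated)
        variants.append(rotated)
    return variants


# Tiny 2x3 grayscale "image"; pixel values are made up for illustration.
image = [
    [0, 1, 2],
    [3, 4, 5],
]

augmented = augment(image)  # five training samples derived from one image
```

All five variants show the same object under the same conditions, which is one reason why a model trained mostly on augmented data may still lack robustness on real-world inputs.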

Another challenge, primarily associated with human communication data, is unlabeled data. This consists of inputs without accompanying descriptions or classifications, making it harder for AI to learn what each input represents. For instance, social media images usually do not have labels describing what is visible in the picture. Manually labeling this data is tedious and prone to errors, so technical approaches would need to be leveraged, such as using a small manually labeled data set to “fine-tune” a model that has already been trained on a similar task, i.e. “transfer learning”.9 All these means of tackling training data challenges can result in unexpected AI outcomes when applied to real-world data. Validating AI results with real-world data is therefore an important part of the development process.
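The idea of fine-tuning with a small labeled set can be sketched as follows. This is a toy illustration under stated assumptions, not a description of any cited system: the "pretrained" layer is a hypothetical fixed matrix standing in for a large network, the four labeled samples are made up, and only a small logistic-regression head is trained while the pretrained part stays frozen.

```python
import math

# Stand-in for a pretrained feature extractor. These weights are hypothetical
# and stay frozen; in practice this would be a large network trained on a
# related task with plentiful data.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Apply the frozen layer (linear map followed by ReLU)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(samples, labels, epochs=200, lr=0.1):
    """Train only a new logistic-regression head on the frozen features."""
    head, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            feats = extract_features(x)
            error = sigmoid(sum(h * f for h, f in zip(head, feats)) + bias) - y
            head = [h - lr * error * f for h, f in zip(head, feats)]
            bias -= lr * error
    return head, bias


def predict(x, head, bias):
    """Return True if the fine-tuned model classifies x as relevant."""
    feats = extract_features(x)
    return sigmoid(sum(h * f for h, f in zip(head, feats)) + bias) >= 0.5


# Small manually labeled set (made-up values): 1 = relevant, 0 = not relevant.
samples = [[2.0, 0.0], [3.0, 1.0], [0.0, 2.0], [1.0, 3.0]]
labels = [1, 1, 0, 0]

head, bias = fine_tune_head(samples, labels)
predictions = [predict(x, head, bias) for x in samples]
```

Because only three parameters are learned, four labeled samples suffice here. The sketch checks predictions only on the training samples themselves, which says nothing about performance on real-world data.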

Confidence and Explainability

The explainability of AI results is a general issue, but is especially important in verification. Unlike “traditional” analysis techniques, AI models perform calculations with millions of fine-tuned parameters, rendering it difficult or impossible to comprehend which data features were most significant for determining the results. Can one be sure, for instance, that the selected data shown to analysts is representative? Can we be sure that no data is filtered out that could give counter-indications? In a verification scenario, especially in cases of alleged non-compliance, it is imperative to be able to explain how inspectorates come to their final conclusions. In formal arms control settings, mutual confidence in the validity of the results of the AI must exist before it can be incorporated into a verification regime. Even in informal settings, international acceptance of assessments involving AI models is contingent on being confident in the AI’s reliability.

There are several ways to address this issue. First, explainable AI (“XAI”) approaches should be leveraged to shed some light on the “black box” of AI. The XAI field has grown significantly over the past years and techniques such as “attribution”, which highlights the aspects of the input data that are most important for the AI’s output, have been developed.10 Nevertheless, many open research questions remain in this field; as of today, XAI cannot address all explainability issues.
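To give a sense of what attribution computes, the sketch below uses occlusion, a simple model-agnostic variant chosen here for brevity rather than the gradient-based saliency maps of the cited literature. Each input feature is replaced by a baseline value in turn, and the resulting change in the model score is recorded. The scoring function, its weights, and the input values are hypothetical.

```python
import math


def model_score(features):
    """Hypothetical stand-in for a trained model: a fixed logistic scorer."""
    weights = [2.0, -1.0, 0.0]
    bias = -0.5
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def occlusion_attribution(score_fn, features, baseline=0.0):
    """Attribute the score to each feature by measuring how much the score
    changes when that feature alone is replaced by a baseline value."""
    full_score = score_fn(features)
    attributions = []
    for i in range(len(features)):
        occluded = list(features)
        occluded[i] = baseline
        attributions.append(full_score - score_fn(occluded))
    return attributions


features = [1.0, 1.0, 1.0]  # made-up input
attributions = occlusion_attribution(model_score, features)

# Index of the feature whose removal changes the score the most.
most_important = max(range(len(features)), key=lambda i: abs(attributions[i]))
```

A positive attribution means the feature pushed the score up, a negative one that it pushed the score down, and a value of zero that the model ignored it. The result depends on the chosen baseline, which is one of the reasons such explanations need careful interpretation.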

Second, research and development of AI, especially models and training data, must be as transparent as possible to all parties to foster trust in the AI’s results and thereby build the necessary basis for AI to be an accepted asset in international agreements. Verification regimes would require codified procedures regarding data collection, training data selection, AI model design and training, testing and validation. Ideally, this transparency entails sharing training data and models, as well as joint international development of AI across all stages. Insights about shortcomings and limitations of the model and potential failures also need to be disclosed. If this is not ensured, the AI’s results are prone to being rejected by the parties, and AI may even become an exploitable weak spot, allowing parties to question the reliability of the verification regime in general.

However, some stakeholders may express reservations about comprehensive transparency. States may refuse to share data deemed sensitive or dual-use relevant. Fully transparent AI models could enable adversarial attacks, i.e. subtle, almost imperceptible changes to input data that are able to fool the model. The concerns regarding data confidentiality and integrity of AI models must be subject to debate before AI is considered part of verification regimes. Nevertheless, any solution emerging from such discussion cannot discard the maxim of far-reaching transparency – otherwise, AI is unlikely to be regarded as an accepted and trusted technique for verification.
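Why full knowledge of a model makes such attacks easier can be seen in a toy example. The sketch assumes a hypothetical linear classifier whose weights are known to the attacker. Each input value is shifted by a small, bounded amount in the direction that lowers the score, in the spirit of gradient-sign attacks. The weights, the input, and the perturbation bound are made up.

```python
def linear_score(weights, bias, x):
    """Score of a linear classifier; a positive score means 'flagged'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(value):
    return (value > 0) - (value < 0)


def adversarial_perturbation(weights, x, epsilon):
    """Shift every input value by at most epsilon against the score gradient.
    For a linear model the gradient with respect to the input is simply the
    weight vector, which is why knowing the weights helps the attacker."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


weights = [0.5, -0.5, 0.25, -0.25]  # hypothetical, assumed known to the attacker
bias = 0.0
x = [0.6, 0.4, 0.6, 0.4]            # made-up input that the model flags

original_score = linear_score(weights, bias, x)
x_adv = adversarial_perturbation(weights, x, epsilon=0.2)
adversarial_score = linear_score(weights, bias, x_adv)

# Largest change applied to any single input value.
max_change = max(abs(a - b) for a, b in zip(x_adv, x))
```

The sign of the score flips although no input value changes by more than the chosen bound. In high-dimensional inputs such as images, much smaller per-value changes can have the same effect, which is what makes them hard for humans to notice.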

Humans and Machines in Decision Making Processes

The introduction of AI into verification processes inevitably cedes parts of the decision-making to the AI system, even where the AI merely assists inspectors, who continue to draw their own conclusions. Human analysts have limited control over the calculations and reasoning of the AI, yet they rely on the information it produces. This is true for probabilistic outputs as well as for selective outputs, where analysts see only part of the data, which may be biased. All AI approaches share the consequence that the final assessment made by humans is not based on the complete original data set presented to the AI, but rather on a pre-processed and filtered one.

To use AI responsibly and accountably in verification, questions about the roles of and interactions between humans and machines must be the subject of discussion. For instance, there is already a discourse about ethical AI guidelines for applications in IAEA safeguards.11 Even though humans may make the final decision, trust in the decision-supporting AI has been identified as a crucial issue in general.12 When humans review or analyze data directly, they can benefit from expert intuition and contextual insights. When AI takes over the data processing step, these “soft” judgement capabilities are difficult to maintain.

Conclusion

AI techniques will very likely play a role in future verification and monitoring. The amount of available data is increasing rapidly, and comparable data has already been successfully analyzed with AI in other contexts, making AI an indispensable tool. Trust in the AI tools used during verification is crucial because drawing conclusions about compliance is the goal of verification and is not only a technical but also a political process. This is particularly relevant, given that the use of AI in verification necessarily means that inspectorates have less independent control.

Approaches to deal with this and build trust in AI methods are technical and procedural in nature. On the technical side, XAI techniques can be applied, which can to some extent explain how input information resulted in a specific assessment. Procedurally, AI for verification must be planned, developed, and tested in a transparent manner to build confidence in the AI’s results, preferably in international field exercises using real-world data for validation. At the same time, a dialogue on handling sensitive data and ensuring model integrity is necessary to make comprehensive transparency possible. This debate must be conducted jointly, involving all stakeholders, and prior to the incorporation of AI into any verification regime.

Stakeholders should allocate resources now, as AI capabilities advance rapidly and building sufficient confidence in AI technology will require a considerable effort over a long time. Because catching up later may prove difficult, states that wish to shape future international security agreements must engage early.

1. IAEA. (2023). IAEA Annual Report 2023. https://www.iaea.org/sites/default/files/gc/gc68-2.pdf; Smartt, H. (2022). Remote Monitoring Systems/Remote Data Transmission for International Nuclear Safeguards. Sandia National Laboratories (SNL). https://doi.org/10.2172/1862624

2. CTBTO. (n.d.). International Data Centre. Retrieved July 31, 2025, from https://www.ctbto.org/our-work/internationaldata-centre; CTBTO. (n.d.). The International Monitoring System. Retrieved July 31, 2025, from https://www.ctbto.org/our-work/international-monitoring-system

3. Moric, I. (2022). Capabilities of Commercial Satellite Earth Observation Systems and Applications for Nuclear Verification and Monitoring. Science & Global Security, 30(1), 22–49. https://doi.org/10.1080/08929882.2022.2063334

4. Reinhold, T., & Schörnig, N. (Eds.). (2024). Armament, Arms Control and Artificial Intelligence. Springer Verlag. https://doi.org/10.1007/978-3-031-11043-6

5. Bayer, M., Kaufhold, M.-A., & Reuter, C. (2021). Information Overload in Crisis Management: Bilingual Evaluation of Embedding Models for Clustering Social Media Posts in Emergencies. ECIS 2021 Research Papers; PEASEC. (n.d.). Open Data Observatory. Retrieved May 27, 2025, from https://peasec.de/projects/observatory/

6. Feldman, Y., Arno, M., Carrano, C., Ng, B., & Chen, B. (2018). Toward a Multimodal-Deep Learning Retrieval System for Monitoring Nuclear Proliferation Activities. Journal of Nuclear Materials Management, 46(3). https://www.ingentaconnect.com/content/inmm/jnmm/2018/00000046/00000003/art00008

7. Bae, S., Kang, K., Kim, Y. K., Jang, Y. J., & Lee, D.-H. (2025). Field-Deployable Real-Time AI System for Chemical Warfare Agent Detection Using YOLOv8 and Colorimetric Sensors. Chemometrics and Intelligent Laboratory Systems, 261, 105365. https://doi.org/10.1016/j.chemolab.2025.105365

8. Shorten, C., & Khoshgoftaar, T. M. (2019). A Survey on Image Data Augmentation for Deep Learning. Journal of Big Data, 6(1), 60. https://doi.org/10.1186/s40537-019-0197-0

9. Pan, S. J., & Yang, Q. (2010). A Survey on Transfer Learning. IEEE Transactions on Knowledge and Data Engineering, 22(10), 1345–59. https://doi.org/10.1109/TKDE.2009.191; Zoph, B., Ghiasi, G., Lin, T.-Y., Cui, Y., Liu, H., Cubuk, E. D., & Le, Q. V. (2020). Rethinking Pre-Training and Self-Training. arXiv.org. https://arxiv.org/abs/2006.06882v2

10. Linardatos, P., Papastefanopoulos, V., & Kotsiantis, S. (2021). Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy, 23(1), 18. https://doi.org/10.3390/e23010018; Longo, L., Brcic, M., Cabitza, F., Choi, J., Confalonieri, R., Del Ser, J., Guidotti, R., Hayashi, Y., Herrera, F., Holzinger, A., Jiang, R., Khosravi, H., Lecue, F., Magieri, G., Páez, A., Samek, W., Schneider, J., Speith, T., & Stumpf, S. (2024). Explainable Artificial Intelligence (XAI) 2.0: A Manifesto of Open Challenges and Interdisciplinary Research Directions. Information Fusion, 106, 102301. https://doi.org/10.1016/j.inffus.2024.102301; Lopes, P., Silva, E., Braga, C., Oliveira, T., & Rosado, L. (2022). XAI Systems Evaluation: A Review of Human and Computer-Centred Methods. Applied Sciences, 12(19), 9423. https://doi.org/10.3390/app12199423; Simonyan, K., Vedaldi, A., & Zisserman, A. (2014). Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps. arXiv.org. https://doi.org/10.48550/arXiv.1312.6034

11. Murphy, C., & Barr, J. (2023). Responsibly Harnessing the Power of AI. Consolidated Nuclear Security, LLC. https://www.osti.gov/biblio/2283006

12. Bayer, S., Gimpel, H., & Markgraf, M. (2022). The Role of Domain Expertise in Trusting and Following Explainable AI Decision Support Systems. Journal of Decision Systems, 32(1), 110–38. https://doi.org/10.1080/12460125.2021.1958505